- plsql developer 一键格式化sql美化sql

- SpringBoot 解决跨域问题的 5 种方案!

- Nginx模块之rewrite模块

- 投springer的期刊时,遇到的一些latex模板使用问题

- Java实战:Spring Boot 实现文件上传下载功能

- 【Linux】nmcli命令详解

- 浅谈Zuul、Gateway

- AI大模型探索之路-应用篇17:GLM大模型-大数据自助查询平台架构实

- AI时代的Web开发:让Web开发更轻松

- SpringBoot部署一 Windows服务器部署

- 深入理解深度学习:LabML AI注解实现库

- navicate远程mysql时报错: connection isbe

- 【微服务】接口幂等性常用解决方案

- 【Rust】——采用发布配置自定义构建

- pytorch超详细安装教程,Anaconda、PyTorch和PyC

- 分布式系统架构中的相关概念

- tomcat默认最大线程数、等待队列长度、连接超时时间

- MySql基础一之【了解MySql与DBeaver操作MySql】

- 深度解析 Spring 源码:三级缓存机制探究

- 输了,腾讯golang一面凉了

- python之 flask 框架(1)

- 【nginx实践连载-4】彻底卸载Nginx(Ubuntu)

- 「PHP系列」If...Else语句switch语句

- 22年国赛tomcat后续(653556547)群

- 蓝禾,三七互娱,顺丰,康冠科技,金证科技24春招内推

- 基于Spring Boot 3 + Spring Security6

- 数据结构第八弹---队列

- 快速入门Spring Data JPA

- 【大数据实时数据同步】超级详细的生产环境OGG(GoldenGate)

- Springboot中JUNIT5单元测试+Mockito详解

一、MapTask的数量由什么决定?

MapTask的数量由以下参数决定

- 文件个数

- 文件大小

- blocksize

一般而言,对于每一个输入的文件会有一个map split,每一个分片会开启一个map任务,很容易导致小文件问题(如果不进行小文件合并,极可能导致Hadoop集群资源雪崩)

hive中小文件产生的原因及解决方案见文章:

(14)Hive调优——合并小文件-CSDN博客文章浏览阅读779次,点赞10次,收藏17次。Hive的小文件问题

https://blog.csdn.net/SHWAITME/article/details/136108785

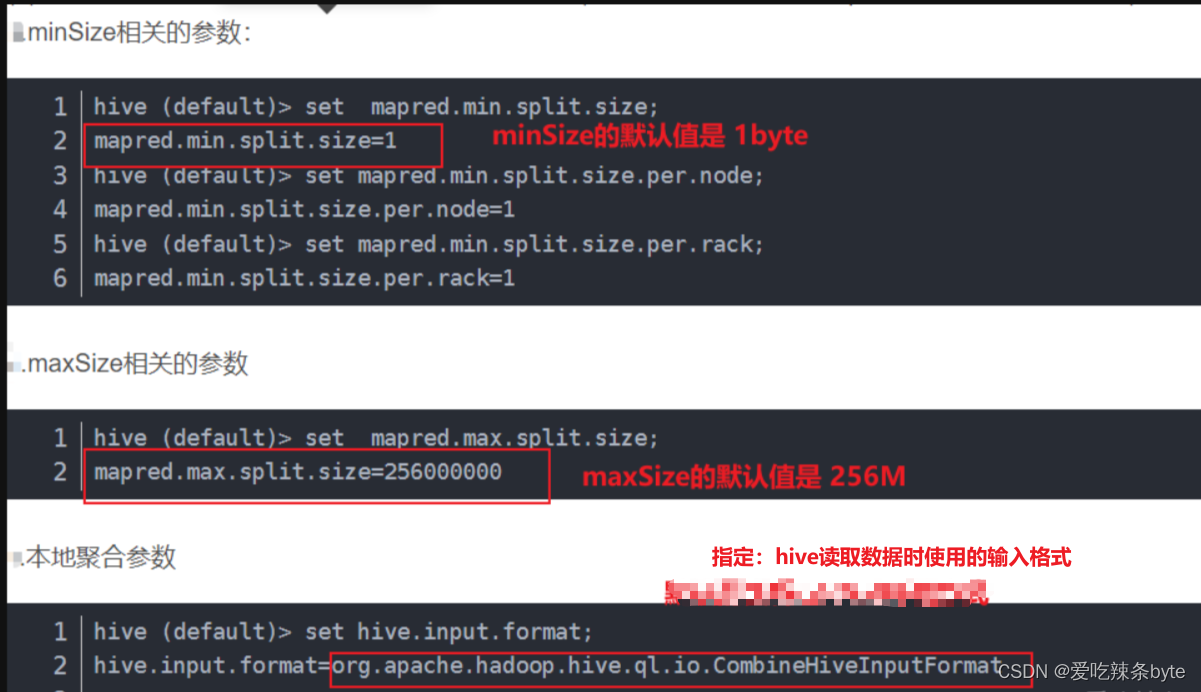

maxSize的默认值为256M,minSize的默认值是1byte,切片大小splitSize的计算公式:

splitSize=Min(maxSize,Max(minSize,blockSize)) = Min(256M,Max(1 byte ,128M)) = 128M =blockSize

所以默认splitSize就等于blockSize块大小

# minSize的默认值是1byte set mapred.min.split.size=1 #maxSize的默认值为256M set mapred.max.split.size=256000000 #hive.input.format是用来指定输入格式的参数。决定了Hive读取数据时使用的输入格式, set hive.input.format=org.apache.hadoop.hive.ql.io.CombineHiveInputFormat

二、如何调整MapTask的数量

假设blockSize一直是128M,且splitSize = blockSize = 128M。在不改变blockSize块大小的情况下,如何增加/减少mapTask数量

2.1 增加map的数量

增加map:需要调小maxSize,且要小于blockSize才有效,例如maxSize调成100byte

splitSize=Min(maxSize,Max(minSize,blockSize)) = Min(100,Max(1,128*1000*1000)) =100 byte = maxSize

调整前的map数 = 输入文件的大小/ splitSize = 输入文件的大小/ 128M

调整后的map数 = 输入文件的大小/ splitSize = 输入文件的大小/ 100byte

2.2 减少map的数量

减少map:需要调大minSize ,且要大于blockSize才有效,例如minSize 调成200M

splitSize=Min(maxSize,Max(minSize,blockSize)) = Min(256m, Max(200M,128M)) = 200M=minSize

调整前的map数 = 输入文件的大小/ splitSize = 输入文件的大小/ 128M

调整后的map数 = 输入文件的大小/ splitSize = 输入文件的大小/ 200M

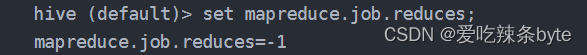

三、ReduceTask的数量决定

reduce的个数决定hdfs上落地文件的个数(即: reduce个数决定文件的输出个数)。 ReduceTask的数量,由参数mapreduce.job.reduces 控制,默认值为 -1 时,代表ReduceTask的数量是根据hive的数据量动态计算的。

总体而言,ReduceTask的数量决定方式有以下两种:

3.1 方式一:hive动态计算

3.1.1 动态计算公式

ReduceTask数量 = min (参数2,输入的总数据量/ 参数1)

参数1:hive.exec.reducers.bytes.per.reducer

含义:每个reduce任务处理的数据量(默认值:256M)

参数2:hive.exec.reducers.max

含义:每个MR任务能开启的reduce任务数的上限值(默认值:1009个)

ps: 一般参数2的值不会轻易变动,因此在普通集群规模下,hive根据数据量动态计算reduce的个数,计算公式为:输入总数据量/hive.exec.reducers.bytes.per.reducer

3.1.2 源码分析

(1)通过源码分析 hive是如何动态计算reduceTask的个数的?

在org.apache.hadoop.hive.ql.exec.mr包下的 MapRedTask类中 //方法类调用逻辑 MapRedTask | ----setNumberOfReducers | ---- estimateNumberOfReducers |---- estimateReducers

(2)核心方法setNumberOfReducers(读取 用户手动设置的reduce个数)

/** * Set the number of reducers for the mapred work. */ private void setNumberOfReducers() throws IOException { ReduceWork rWork = work.getReduceWork(); // this is a temporary hack to fix things that are not fixed in the compiler // 获取通过外部传参设置reduce数量的值 rWork.getNumReduceTasks() Integer numReducersFromWork = rWork == null ? 0 : rWork.getNumReduceTasks(); if (rWork == null) { console .printInfo("Number of reduce tasks is set to 0 since there's no reduce operator"); } else { if (numReducersFromWork >= 0) { //如果手动设置了reduce的数量 大于等于0 ,则进来,控制台打印日志 console.printInfo("Number of reduce tasks determined at compile time: " + rWork.getNumReduceTasks()); } else if (job.getNumReduceTasks() > 0) { //如果手动设置了reduce的数量,获取配置中的值,并传入到work中 int reducers = job.getNumReduceTasks(); rWork.setNumReduceTasks(reducers); console .printInfo("Number of reduce tasks not specified. Defaulting to jobconf value of: " + reducers); } else { //如果没有手动设置reduce的数量,进入方法 if (inputSummary == null) { inputSummary = Utilities.getInputSummary(driverContext.getCtx(), work.getMapWork(), null); } // #==========【重中之中】estimateNumberOfReducers int reducers = Utilities.estimateNumberOfReducers(conf, inputSummary, work.getMapWork(), work.isFinalMapRed()); rWork.setNumReduceTasks(reducers); console .printInfo("Number of reduce tasks not specified. Estimated from input data size: " + reducers); } //hive shell中所看到的控制台打印日志就在这里 console .printInfo("In order to change the average load for a reducer (in bytes):"); console.printInfo(" set " + HiveConf.ConfVars.BYTESPERREDUCER.varname + "="); console.printInfo("In order to limit the maximum number of reducers:"); console.printInfo(" set " + HiveConf.ConfVars.MAXREDUCERS.varname + "= "); console.printInfo("In order to set a constant number of reducers:"); console.printInfo(" set " + HiveConf.ConfVars.HADOOPNUMREDUCERS + "= "); } } (3)如果没有手动设置reduce的个数,hive是如何动态计算reduce个数的?

int reducers = Utilities.estimateNumberOfReducers(conf, inputSummary, work.getMapWork(), work.isFinalMapRed()); /** * Estimate the number of reducers needed for this job, based on job input, * and configuration parameters. * * The output of this method should only be used if the output of this * MapRedTask is not being used to populate a bucketed table and the user * has not specified the number of reducers to use. * * @return the number of reducers. */ public static int estimateNumberOfReducers(HiveConf conf, ContentSummary inputSummary, MapWork work, boolean finalMapRed) throws IOException { // bytesPerReducer 每个reduce处理的数据量,默认值为256M BYTESPERREDUCER("hive.exec.reducers.bytes.per.reducer", 256000000L) long bytesPerReducer = conf.getLongVar(HiveConf.ConfVars.BYTESPERREDUCER); //整个mr任务,可以开启的reduce个数的上限值:maxReducers的默认值1009个MAXREDUCERS("hive.exec.reducers.max", 1009) int maxReducers = conf.getIntVar(HiveConf.ConfVars.MAXREDUCERS); //#===========对totalInputFileSize的计算 double samplePercentage = getHighestSamplePercentage(work); long totalInputFileSize = getTotalInputFileSize(inputSummary, work, samplePercentage); // if all inputs are sampled, we should shrink the size of reducers accordingly. if (totalInputFileSize != inputSummary.getLength()) { LOG.info("BytesPerReducer=" + bytesPerReducer + " maxReducers=" + maxReducers + " estimated totalInputFileSize=" + totalInputFileSize); } else { LOG.info("BytesPerReducer=" + bytesPerReducer + " maxReducers=" + maxReducers + " totalInputFileSize=" + totalInputFileSize); } // If this map reduce job writes final data to a table and bucketing is being inferred, // and the user has configured Hive to do this, make sure the number of reducers is a // power of two boolean powersOfTwo = conf.getBoolVar(HiveConf.ConfVars.HIVE_INFER_BUCKET_SORT_NUM_BUCKETS_POWER_TWO) && finalMapRed && !work.getBucketedColsByDirectory().isEmpty(); //#==============【真正计算reduce个数的方法】看源码的技巧return的方法是重要核心方法 return estimateReducers(totalInputFileSize, bytesPerReducer, maxReducers, powersOfTwo); }(4) 动态计算reduce个数的方法 estimateReducers

public static int estimateReducers(long totalInputFileSize, long bytesPerReducer, int maxReducers, boolean powersOfTwo) { double bytes = Math.max(totalInputFileSize, bytesPerReducer); // 假设totalInputFileSize 1000M // bytes=Math.max(1000M,256M)=1000M int reducers = (int) Math.ceil(bytes / bytesPerReducer); //reducers=(int)Math.ceil(1000M/256M)=4 此公式说明如果totalInputFileSize 小于256M ,则reducers=1 ;也就是当输入reduce端的数据量特别小,即使手动设置reduce Task数量为5,最终也只会开启1个reduceTask reducers = Math.max(1, reducers); //Math.max(1, 4)=4 ,reducers的结果还是4 reducers = Math.min(maxReducers, reducers); //Math.min(1009,4)=4; reducers的结果还是4 int reducersLog = (int)(Math.log(reducers) / Math.log(2)) + 1; int reducersPowerTwo = (int)Math.pow(2, reducersLog); if (powersOfTwo) { // If the original number of reducers was a power of two, use that if (reducersPowerTwo / 2 == reducers) { // nothing to do } else if (reducersPowerTwo > maxReducers) { // If the next power of two greater than the original number of reducers is greater // than the max number of reducers, use the preceding power of two, which is strictly // less than the original number of reducers and hence the max reducers = reducersPowerTwo / 2; } else { // Otherwise use the smallest power of two greater than the original number of reducers reducers = reducersPowerTwo; } } return reducers; }3.2 方式二:用户手动指定

手动调整reduce个数: set mapreduce.job.reduces = 10

需要注意:出现以下几种情况时,手动调整reduce个数不生效。

3.2.1 order by 全局排序

sql中使用了order by全局排序,那只能在一个reduce中完成,无论怎么调整reduce的数量都是无效的。

hive (default)>set mapreduce.job.reduces=5; hive (default)> select * from empt order by length(ename); Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

3.2.2 map端输出的数据量很小

在【3.1.2 源码分析——(4) 】动态计算reduce个数的核心方法 estimateReducers中,有下面这三行代码:

int reducers = (int) Math.ceil(bytes / bytesPerReducer); reducers = Math.max(1, reducers); reducers = Math.min(maxReducers, reducers);

如果map端输出的数据量bytes (假如只有1M) 远小于hive.exec.reducers.bytes.per.reducer (每个reduce处理的数据量默认值为256M) 参数值,maxReducers默认为1009个,计算下列值:

int reducers = (int) Math.ceil(1 / 256M)=1; reducers = Math.max(1, 1)=1; reducers = Math.min(1009, 1)=1;

此时即使用户手动 set mapreduce.job.reduces=10,也不生效,reduce个数最后还是只有1个。

参考文章:

Hive mapreduce的map与reduce个数由什么决定?_hive中map任务和reduce任务数量计算原理-CSDN博客