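Hadoop 3.1.3 installation notes

- Linux installation

- VMware

- New Virtual Machine Wizard

- VMware network configuration

- CentOS 7.5

- Installation

- Server setup

- Connecting with WindTerm (any SSH client works)

- JDK and Hadoop installation

- Upload and preparation

- Environment variables

- Hadoop configuration

- Cloning the servers and helper scripts

- Cloning and configuring the servers

- Distribution scripts

- **done! & start!**

- Common ports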

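Linux installation

Environment used in these notes:

CentOS 7.5 (DVD ISO)

VMware as the virtualization platform

JDK 1.8

Hadoop 3.1.3

VMware

New Virtual Machine Wizard

- Choose "Typical"

- Select "I will install the operating system later"

- Guest OS: Linux, version CentOS 7 64-bit

- Virtual machine name: hadoop11

- Location: d:\dev\hadoop11

- Disk size: 50 GB

- Split the virtual disk into multiple files

- Customize hardware: 4 GB of memory (adjust to your machine)

- Processors: 2, cores per processor: 2 (adjust to your machine)

- New CD/DVD: use an ISO image file and select the CentOS 7.5 DVD image

- Done

- Power on the virtual machine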

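VMware network configuration

- Open Edit > Virtual Network Editor

- Select the NAT network and change the subnet IP to 192.168.【lucky number】.0

- In NAT Settings, change the gateway IP to 192.168.【lucky number】.2

- On the host, edit Internet Protocol Version 4 (TCP/IPv4) of the network adapter that belongs to this VMware network:

IP address 192.168.【lucky number】.1

Subnet mask 255.255.255.0

Default gateway 192.168.【lucky number】.2

Preferred DNS 192.168.【lucky number】.2

These notes use 10 as the lucky number. Pick a value that does not collide with a subnet already in use in your environment.

- Done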
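All four addresses follow from the single number chosen for the third octet. As a minimal sketch, the helper below prints them for a given number so they can be checked before typing them into the dialogs. The name `nat_plan` is made up for this illustration and is not part of VMware or CentOS.

```shell
#!/bin/bash
# nat_plan is a hypothetical helper, not part of VMware or CentOS.
# It prints the addresses this guide derives from the chosen third octet.
nat_plan() {
    local n="$1"
    # The third octet must be an integer between 0 and 255.
    if ! [[ "$n" =~ ^[0-9]+$ ]] || [ "$n" -gt 255 ]; then
        echo "usage: nat_plan N (0-255)" >&2
        return 1
    fi
    echo "subnet=192.168.$n.0"
    echo "host_adapter=192.168.$n.1"
    echo "gateway=192.168.$n.2"
    echo "dns=192.168.$n.2"
}

nat_plan 10
```

With 10 as the argument, the gateway and DNS lines both show 192.168.10.2, which is the value used for the guest later on.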

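CentOS 7.5

Installation

- Localization: set the current date and time

- Software selection: Minimal Install, and tick every entry under "Add-Ons for Selected Environment"

- Installation destination: under "Other Storage Options" choose "I will configure partitioning"

- Choose "Standard Partition"

- + Mount point /boot, 1 GB, add mount point, file system ext4

- + Mount point swap, 4 GB, add mount point, file system swap

- + Mount point /, 45 GB, add mount point, file system ext4

- Finish the standard partition setup

- KDUMP (next to the partitioning entry): disable kdump

- Network & Host Name: turn on the Ethernet connection, set the host name to hadoop11, click Apply, then Done

- SECURITY POLICY: shows "No content found", nothing to configure

- Click Begin Installation

- Set the root password and create a user

- Install the basic tools

    yum install -y epel-release
    yum install -y vim
    yum install -y net-tools
    yum install -y ntp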

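Server setup

- Grant the user root privileges

vim /etc/sudoers

- Replace user with the user name created during the Linux installation

- ⚠️ Important: the position of the line matters

Add the line after %wheel ALL=(ALL) ALL.

Note: do not put the user line directly below the root line. sudoers is read from top to bottom and the last matching entry wins. If the user belongs to the wheel group, a NOPASSWD entry placed above the %wheel line is overridden by it, and sudo asks for a password again. The user line therefore has to go below the %wheel line.

    # Find the line "%wheel ALL=(ALL) ALL" and add the following line below it
    user ALL=(ALL) NOPASSWD:ALL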
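Because the ordering is easy to get wrong, it can be checked mechanically. The sketch below is only an illustration: `check_sudoers_order` is a hypothetical helper that compares line numbers in a sudoers-style file, and it assumes the user entry starts at the beginning of a line.

```shell
#!/bin/bash
# check_sudoers_order is a hypothetical helper for illustration.
# It succeeds when the "<user> ... NOPASSWD" line appears after the "%wheel" line.
check_sudoers_order() {
    local file="$1" user="$2" wheel_line user_line
    # Line number of the first active (uncommented) %wheel entry.
    wheel_line=$(grep -n '^%wheel' "$file" | head -n1 | cut -d: -f1)
    # Line number of the last NOPASSWD entry for the user.
    user_line=$(grep -n "^${user}[[:space:]].*NOPASSWD" "$file" | tail -n1 | cut -d: -f1)
    [ -n "$wheel_line" ] && [ -n "$user_line" ] && [ "$user_line" -gt "$wheel_line" ]
}

# Usage (as root, because /etc/sudoers is not world-readable):
# check_sudoers_order /etc/sudoers user && echo "order looks right"
```

The syntax of the file itself can be validated separately with `visudo -c`.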

- Set the hostname

vim /etc/hostname

hadoop11

- Set up hosts

vim /etc/hosts

    192.168.10.11 hadoop11
    192.168.10.12 hadoop12
    192.168.10.13 hadoop13
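The same three entries are needed on every node, so a small helper can save retyping. This is a sketch under the addressing used in these notes; `add_cluster_hosts` is a hypothetical name, and the file argument exists only so the function can be tried on a copy first.

```shell
#!/bin/bash
# add_cluster_hosts is a hypothetical helper; the file argument defaults to /etc/hosts.
# It appends each cluster entry only if the host name is not already present,
# so running it twice does not create duplicates.
add_cluster_hosts() {
    local file="${1:-/etc/hosts}" i
    for i in 11 12 13; do
        if ! grep -qw "hadoop$i" "$file"; then
            echo "192.168.10.$i hadoop$i" >> "$file"
        fi
    done
}

# Usage (as root): add_cluster_hosts
```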

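- Disable the firewall

    systemctl stop firewalld
    systemctl disable firewalld.service

- Remove the bundled JDK (a minimal install ships without one, so this step may find nothing)

rpm -qa: lists all installed rpm packages

grep -i: ignores case

xargs -n1: passes one argument per invocation

rpm -e --nodeps: removes a package without checking its dependencies

rpm -qa | grep -i java | xargs -n1 rpm -e --nodeps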
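Since `--nodeps` skips the dependency check, it can be worth seeing what the pipeline would do before running it. The sketch below is a dry run: the package names are made-up sample input rather than real `rpm -qa` output, and `echo` is placed in front of `rpm` so the commands are printed instead of executed.

```shell
#!/bin/bash
# Dry run: the package names below are made-up sample input, not real rpm output.
sample_packages() {
    printf '%s\n' \
        "java-1.8.0-openjdk-headless-1.8.0.161" \
        "vim-enhanced-7.4.160" \
        "JavaPackages-tools-3.4.1"
}

# "echo" before rpm prints each command instead of running it.
dry_run_remove() {
    sample_packages | grep -i java | xargs -n1 echo rpm -e --nodeps
}

dry_run_remove
```

On a real machine, replacing `sample_packages` with `rpm -qa` gives the same preview for the installed packages.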

- Configure the VM's IP address

- Switch to root (su)

vim /etc/sysconfig/network-scripts/ifcfg-ens33

- # IP assignment method [none|static|bootp|dhcp] (no protocol at boot | static IP | BOOTP | DHCP)

BOOTPROTO="static"

- # IP address

IPADDR=192.168.10.11

- # Gateway

GATEWAY=192.168.10.2

- # DNS server

DNS1=192.168.10.2

The complete file then looks like this (the UUID is specific to each machine):

    TYPE="Ethernet"
    PROXY_METHOD="none"
    BROWSER_ONLY="no"
    BOOTPROTO="static"
    DEFROUTE="yes"
    IPV4_FAILURE_FATAL="no"
    IPV6INIT="yes"
    IPV6_AUTOCONF="yes"
    IPV6_DEFROUTE="yes"
    IPV6_FAILURE_FATAL="no"
    IPV6_ADDR_GEN_MODE="stable-privacy"
    NAME="ens33"
    UUID="ec201efe-1f94-46ab-b276-50022ceee6e8"
    DEVICE="ens33"
    ONBOOT="yes"
    IPADDR=192.168.10.11
    GATEWAY=192.168.10.2
    DNS1=192.168.10.2

Save the file, then reboot

reboot
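A typo in this file, for example curly quotes pasted around static, only shows up once the network fails to come up. The sketch below checks the file mechanically. It relies on ifcfg files using shell KEY=value syntax; `check_ifcfg` is a hypothetical helper name.

```shell
#!/bin/bash
# check_ifcfg is a hypothetical helper. ifcfg files use shell KEY=value syntax,
# so the file is sourced in a subshell to keep the current shell clean.
check_ifcfg() {
    local file="$1"
    (
        . "$file" || exit 1
        [ "${BOOTPROTO:-}" = "static" ] || { echo "BOOTPROTO is not static"; exit 1; }
        [ "${ONBOOT:-}" = "yes" ] || { echo "ONBOOT is not yes"; exit 1; }
        [ -n "${IPADDR:-}" ] && [ -n "${GATEWAY:-}" ] && [ -n "${DNS1:-}" ] \
            || { echo "IPADDR, GATEWAY or DNS1 is missing"; exit 1; }
        echo "static $IPADDR via $GATEWAY dns $DNS1"
    )
}

# Usage: check_ifcfg /etc/sysconfig/network-scripts/ifcfg-ens33
```

Once the machine is back up, `ip addr show ens33` should list the configured address.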

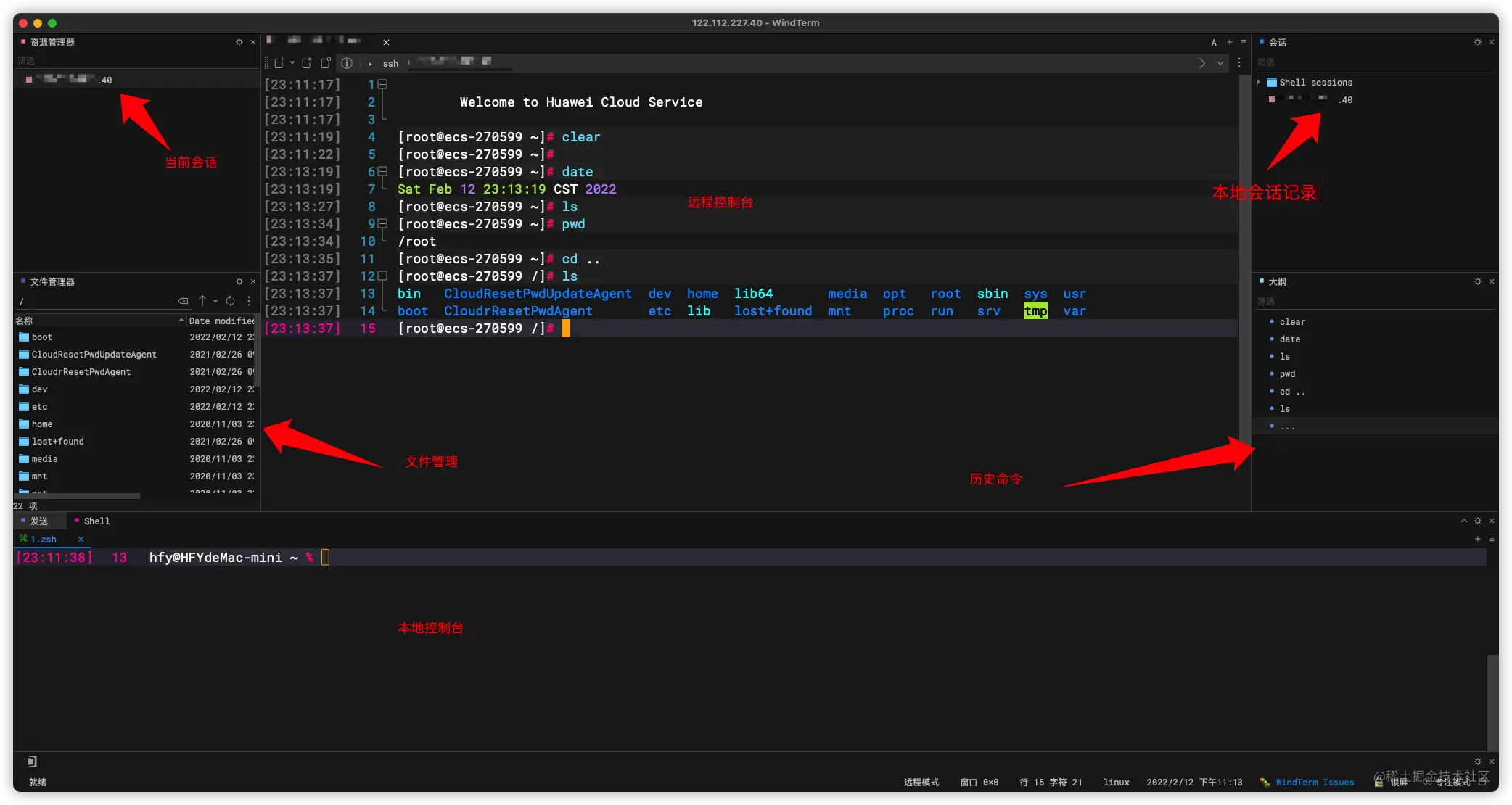

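Connecting with WindTerm (any SSH client works)

- Download WindTerm yourself. It is published on GitHub; searching Baidu for a download source is suggested

- Create a new session with host 192.168.10.11

- All following steps are done in WindTerm

JDK and Hadoop installation

Upload and preparation

- Create module and software under /opt

    mkdir /opt/module
    mkdir /opt/software

- In WindTerm's file manager, go to /opt/software/

- Upload JDK 1.8 and Hadoop 3.1.3 to the software directory on the server

- Extract both archives into the module directory

    tar -zxvf jdk-8u212-linux-x64.tar.gz -C /opt/module/
    tar -zxvf hadoop-3.1.3.tar.gz -C /opt/module/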

Environment variables

vim /etc/profile

- Append the 【environment variables】 below to the end of the file

- Alternatively, create my_env.sh under /etc/profile.d and put the 【environment variables】 there

vim /etc/profile.d/my_env.sh

- 【Environment variables】

    #JAVA_HOME
    export JAVA_HOME=/opt/module/jdk1.8.0_212
    export PATH=$PATH:$JAVA_HOME/bin
    #HADOOP_HOME
    export HADOOP_HOME=/opt/module/hadoop-3.1.3
    export PATH=$PATH:$HADOOP_HOME/bin
    export PATH=$PATH:$HADOOP_HOME/sbin

- Reload the configuration

source /etc/profile

- Check that it works

    jps
    java -version
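If `jps` or `java -version` is not found, the usual cause is a variable that points at the wrong directory. A minimal sketch of a check follows; `check_hadoop_env` is a hypothetical helper, and it only tests that the two variables are set and contain the expected executables.

```shell
#!/bin/bash
# check_hadoop_env is a hypothetical helper that verifies the two exported
# variables point at directories containing the expected executables.
check_hadoop_env() {
    local status=0
    if [ -z "${JAVA_HOME:-}" ] || [ ! -x "$JAVA_HOME/bin/java" ]; then
        echo "JAVA_HOME is unset or has no bin/java: '${JAVA_HOME:-}'"
        status=1
    fi
    if [ -z "${HADOOP_HOME:-}" ] || [ ! -x "$HADOOP_HOME/bin/hadoop" ]; then
        echo "HADOOP_HOME is unset or has no bin/hadoop: '${HADOOP_HOME:-}'"
        status=1
    fi
    return "$status"
}

# Usage after "source /etc/profile":
# check_hadoop_env && echo "environment looks fine"
```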

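Hadoop configuration

192.168.10.11 hadoop11 is the master node (NameNode)

192.168.10.12 hadoop12 is the YARN node (ResourceManager)

192.168.10.13 hadoop13 is the secondary node (SecondaryNameNode)

- All of the following files live in $HADOOP_HOME/etc/hadoop/, so change into that directory first

cd /opt/module/hadoop-3.1.3/etc/hadoop

- core-site.xml sets the NameNode address, the data directory, and the static user that the web UI acts as once the cluster is running

- Note: replace the value of hadoop.http.staticuser.user (ocken below) with your own Linux user, the one that was given root privileges above

vim core-site.xml

    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://hadoop11:8020</value>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>/opt/module/hadoop-3.1.3/data</value>
      </property>
      <!-- replace ocken with your own Linux user -->
      <property>
        <name>hadoop.http.staticuser.user</name>
        <value>ocken</value>
      </property>
    </configuration>

- hdfs-site.xml sets the web addresses of the NameNode and the SecondaryNameNode

vim hdfs-site.xml

    <configuration>
      <property>
        <name>dfs.namenode.http-address</name>
        <value>hadoop11:9870</value>
      </property>
      <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>hadoop13:9868</value>
      </property>
    </configuration>

- yarn-site.xml sets the YARN server

vim yarn-site.xml

    <configuration>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
      <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>hadoop12</value>
      </property>
      <property>
        <name>yarn.nodemanager.env-whitelist</name>
        <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
      </property>
      <property>
        <name>yarn.log-aggregation-enable</name>
        <value>true</value>
      </property>
      <property>
        <name>yarn.log.server.url</name>
        <value>http://hadoop11:19888/jobhistory/logs</value>
      </property>
      <!-- 604800 seconds = 7 days -->
      <property>
        <name>yarn.log-aggregation.retain-seconds</name>
        <value>604800</value>
      </property>
    </configuration>

- mapred-site.xml

vim mapred-site.xml

    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.address</name>
        <value>hadoop11:10020</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>hadoop11:19888</value>
      </property>
    </configuration>

- workers: the file must not contain any spaces or indentation

vim workers

    hadoop11
    hadoop12
    hadoop13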
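Stray whitespace in workers is invisible in an editor, so a quick scan is useful. The sketch below flags any line that starts or ends with a space or tab; `check_workers` is a hypothetical helper name.

```shell
#!/bin/bash
# check_workers is a hypothetical helper. It prints (with line numbers) every
# line of a workers file that starts or ends with whitespace, and fails if
# any such line exists.
check_workers() {
    local file="$1"
    if grep -nE '^[[:space:]]|[[:space:]]$' "$file"; then
        echo "workers file contains leading or trailing whitespace" >&2
        return 1
    fi
    return 0
}

# Usage: check_workers /opt/module/hadoop-3.1.3/etc/hadoop/workers
```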

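Cloning the servers and helper scripts

Cloning and configuring the servers

- In VMware: right-click > Power > Shut Down Guest, then right-click > Snapshot > Take Snapshot, named with the date

- Right-click > Manage > Clone > Next > "The current state in the virtual machine" > Next > "Create a full clone"

- Name the virtual machines hadoop12 and hadoop13; choose the location yourself

- On each clone, edit hostname and the ifcfg-ens33 file so that they match the machine (hadoop12 with 192.168.10.12, hadoop13 with 192.168.10.13).

    vim /etc/hostname
    vim /etc/sysconfig/network-scripts/ifcfg-ens33

- reboot

- Add a WindTerm session for each new server.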
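On each clone only two values change: the IPADDR line and the host name. As a sketch of the same edit done non-interactively, the hypothetical helper `retarget_clone` below rewrites both. It assumes GNU sed (as shipped with CentOS) and the addressing used in these notes, and it takes the file paths as optional arguments so it can be tried on copies first.

```shell
#!/bin/bash
# retarget_clone is a hypothetical helper, to be run as root on a fresh clone.
# Arguments: node number (12 or 13), ifcfg file, hostname file.
# The last two default to the paths used in this guide.
retarget_clone() {
    local n="$1"
    local ifcfg="${2:-/etc/sysconfig/network-scripts/ifcfg-ens33}"
    local hostfile="${3:-/etc/hostname}"
    # Rewrite only the IPADDR line; gateway and DNS are the same on every node.
    sed -i "s/^IPADDR=.*/IPADDR=192.168.10.$n/" "$ifcfg"
    echo "hadoop$n" > "$hostfile"
}

# Usage on the second machine: retarget_clone 12
```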

Distribution scripts

- Set up passwordless SSH login by running the following on each of the three servers.

    ssh-keygen -t rsa
    ssh-copy-id hadoop11
    ssh-copy-id hadoop12
    ssh-copy-id hadoop13
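The helper scripts later in these notes all depend on key-based login working in every direction, so it helps to verify it once per node. The sketch below uses the ssh option `BatchMode=yes`, which makes ssh fail instead of asking for a password; `check_passwordless_ssh` is a hypothetical helper name.

```shell
#!/bin/bash
# check_passwordless_ssh is a hypothetical helper. BatchMode=yes makes ssh fail
# instead of prompting, so a password prompt shows up as a failure.
check_passwordless_ssh() {
    local host failed=0
    for host in "$@"; do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
            echo "$host: ok"
        else
            echo "$host: key login failed"
            failed=1
        fi
    done
    return "$failed"
}

# Usage on any of the three nodes:
# check_passwordless_ssh hadoop11 hadoop12 hadoop13
```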

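- Background: scp and rsync

scp -r $pdir/$fname $user@$host:$pdir/$fname

command, recursive flag, source path/name, destination user@host:destination path/name

rsync -av $pdir/$fname $user@$host:$pdir/$fname

command, options, source path/name, destination user@host:destination path/name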
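In both commands the same absolute path is used on the source and the destination, which is why `$pdir` and `$fname` are computed from whatever path the caller passes in. A minimal sketch of that derivation follows; `resolve_target` is a hypothetical helper name.

```shell
#!/bin/bash
# resolve_target is a hypothetical helper showing how $pdir and $fname
# can be derived from any existing path, relative or absolute.
resolve_target() {
    local file="$1" pdir fname
    # cd -P resolves symbolic links, so pdir is the physical parent directory.
    pdir=$(cd -P "$(dirname "$file")" && pwd)
    fname=$(basename "$file")
    echo "$pdir/$fname"
}

# Usage: resolve_target ../conf/site.xml
```

Resolving the parent directory first means a relative argument such as `../conf/site.xml` ends up at the same absolute location on every node.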

- The xsync script

sudo vim /home/【user】/bin/xsync

    #!/bin/bash
    # 1. Check the number of arguments
    if [ $# -lt 1 ]
    then
        echo "Not enough arguments!"
        exit 1
    fi
    # 2. Loop over every machine in the cluster
    for host in hadoop11 hadoop12 hadoop13
    do
        echo ==================== $host ====================
        # 3. Loop over all files and directories and send them one by one
        for file in "$@"
        do
            # 4. Check that the file exists
            if [ -e "$file" ]
            then
                # 5. Resolve the parent directory
                pdir=$(cd -P "$(dirname "$file")"; pwd)
                # 6. Get the file name
                fname=$(basename "$file")
                ssh $host "mkdir -p $pdir"
                rsync -av "$pdir/$fname" $host:$pdir
            else
                echo "$file does not exist!"
            fi
        done
    done

- Make it executable

Replace 【user】 with your own user name on the server

sudo chmod 777 xsync

- myhadoop.sh, the cluster start/stop script

    cd /home/【user】/bin
    sudo vim myhadoop.sh

    #!/bin/bash
    if [ $# -lt 1 ]
    then
        echo "No Args Input..."
        exit
    fi
    # $1 selects the action: start or stop
    case $1 in
    "start")
        echo " =================== starting the hadoop cluster ==================="
        echo " --------------- starting hdfs ---------------"
        ssh hadoop11 "/opt/module/hadoop-3.1.3/sbin/start-dfs.sh"
        echo " --------------- starting yarn ---------------"
        ssh hadoop12 "/opt/module/hadoop-3.1.3/sbin/start-yarn.sh"
        echo " --------------- starting historyserver ---------------"
        ssh hadoop11 "/opt/module/hadoop-3.1.3/bin/mapred --daemon start historyserver"
    ;;
    "stop")
        echo " =================== stopping the hadoop cluster ==================="
        echo " --------------- stopping historyserver ---------------"
        ssh hadoop11 "/opt/module/hadoop-3.1.3/bin/mapred --daemon stop historyserver"
        echo " --------------- stopping yarn ---------------"
        ssh hadoop12 "/opt/module/hadoop-3.1.3/sbin/stop-yarn.sh"
        echo " --------------- stopping hdfs ---------------"
        ssh hadoop11 "/opt/module/hadoop-3.1.3/sbin/stop-dfs.sh"
    ;;
    *)
        echo "Input Args Error..."
    ;;
    esac

- Make the script executable

sudo chmod +x myhadoop.sh

- jpsall, the script that lists the Java processes on every node

sudo vim jpsall

    #!/bin/bash
    for host in hadoop11 hadoop12 hadoop13
    do
        echo =============== $host ===============
        ssh $host jps
    done

sudo chmod 777 jpsall

- Distribute the scripts

When distributing with xsync, pass the bin directory explicitly so that the .ssh directory is not synchronized 🥸

xsync /home/【user】/bin/*

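done! & start!

Run the following as the Linux user 【user】

- Format the NameNode, once, on hadoop11 only

Run this only before the first start. The NameNode lives on hadoop11, so the other two servers do not need it. Formatting again later gives the NameNode a new cluster ID that the existing DataNodes no longer match.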

hdfs namenode -format
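Formatting a NameNode that already holds metadata is destructive, so a guard in front of the command is cheap insurance. The sketch below is an assumption-laden illustration: `namenode_is_formatted` is a hypothetical helper, and it relies on the default `dfs.namenode.name.dir`, which is `dfs/name` under `hadoop.tmp.dir` (set to /opt/module/hadoop-3.1.3/data in core-site.xml in these notes).

```shell
#!/bin/bash
# namenode_is_formatted is a hypothetical helper. It assumes the default
# dfs.namenode.name.dir (dfs/name under hadoop.tmp.dir); formatting writes
# a VERSION file under current/ in that directory.
namenode_is_formatted() {
    local tmp_dir="${1:-/opt/module/hadoop-3.1.3/data}"
    [ -f "$tmp_dir/dfs/name/current/VERSION" ]
}

# Usage on hadoop11, where the NameNode runs:
# namenode_is_formatted || hdfs namenode -format
```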

- Start and stop the cluster

    # start the cluster
    myhadoop.sh start
    # stop the cluster
    myhadoop.sh stop
    # list the processes on every node
    jpsall
    # output like the following means everything is running
    =============== hadoop11 ===============
    5379 JobHistoryServer
    4915 DataNode
    5204 NodeManager
    4760 NameNode
    5448 Jps
    =============== hadoop12 ===============
    7282 ResourceManager
    7747 Jps
    7592 NodeManager
    7098 DataNode
    =============== hadoop13 ===============
    5040 NodeManager
    5147 Jps
    4843 DataNode
    4956 SecondaryNameNode

- Common hdfs commands

For example

    # create a directory
    hadoop fs -mkdir /input
    # upload the JDK archive to /input
    hadoop fs -put /opt/software/jdk-8u212-linux-x64.tar.gz /input

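Common ports

- YARN ResourceManager web UI (running jobs): http://hadoop12:8088/cluster

- NameNode HTTP UI: http://hadoop11:9870/

- JobHistory server web UI: http://hadoop11:19888/jobhistory

- NameNode internal RPC port: 8020 (the port set in fs.defaultFS)

These notes are based on the Atguigu (尚硅谷) video course "Big Data Hadoop Tutorial: Hadoop 3.x from Setup to Cluster Tuning", which has over a million views. Many thanks to the instructor, Hai Ge.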
